Robots.txt is a plain-text file that tells search engine crawlers which parts of your website they may crawl. The file is located in the root directory of your website and is accessible at “www.examplesite.com/robots.txt.” It specifies which pages of your website search engines can crawl and which they cannot.
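Because the file always sits at the root of the site, its address can be derived from any page URL on that site. The snippet below is a minimal sketch of that idea using Python's standard library; the helper name `robots_txt_url` is hypothetical, and it assumes the page URL includes a scheme such as `https://`.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        # Assumption: callers pass absolute URLs, e.g. "https://host/path".
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Keep only scheme and host; drop the page path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.examplesite.com/blog/post?id=7#top"))
# prints: https://www.examplesite.com/robots.txt
```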

How Does Robots.txt Work?

The Robots.txt file provides directives to search engine bots that visit your website. Well-behaved bots read the file before crawling and skip the paths it disallows. This lets you keep crawlers out of specific sections or content of your website, or block them altogether. Note that Robots.txt controls crawling rather than indexing: a disallowed page can still appear in search results if other sites link to it.
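To see this from the crawler's side, here is a minimal sketch using `urllib.robotparser` from Python's standard library. The helper `is_crawl_allowed` and the two sample rule sets are illustrative assumptions, and real search engines may interpret edge cases differently from Python's parser.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Ask whether user_agent may fetch url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rule sets: one hides a single folder, one blocks the whole site.
PARTIAL_BLOCK = "User-agent: *\nDisallow: /secret/\n"
FULL_BLOCK = "User-agent: *\nDisallow: /\n"

page = "https://www.examplesite.com/blog/"
hidden = "https://www.examplesite.com/secret/page.html"

print(is_crawl_allowed(PARTIAL_BLOCK, "Googlebot", page))    # True
print(is_crawl_allowed(PARTIAL_BLOCK, "Googlebot", hidden))  # False
print(is_crawl_allowed(FULL_BLOCK, "Googlebot", page))       # False
```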

Essential Components of a Robots.txt File

A Robots.txt file is built from two main directives, “User-agent” and “Disallow,” and sometimes a third, “Allow.”

User-agent: Specifies which search engine bots the directives apply to. Generally, an asterisk (*) indicates all search engines, but you can target a specific search engine using its User-agent name. For example, to target Google, you can use “User-agent: Googlebot.”

Disallow: Specifies the folders or pages you do not want to be crawled. The “/” symbol represents the root directory. For example, the directive “Disallow: /secret/” prevents the “www.examplesite.com/secret/” folder and its contents from being crawled.

Allow: Allows specific folders or pages to be crawled. It is beneficial when you want to allow certain pages to be crawled within an otherwise disallowed directory. For example, “Allow: /public/” permits crawling of the “/public/” folder.
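The three directives can be combined into groups, one per User-agent. The sketch below checks a hypothetical file with Python's `urllib.robotparser`; the paths `/drafts/` and `/secret/press-kit/` and the bot name `ExampleBot` are made up for illustration. The Allow line is placed before the broader Disallow line because Python's parser applies the first matching rule, while some search engines pick the most specific match instead; this ordering gives the same result under both approaches.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for Googlebot, one for every other bot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Allow: /secret/press-kit/
Disallow: /secret/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BASE = "https://www.examplesite.com"

# Googlebot follows only its own group, so /secret/ is not blocked for it.
googlebot_drafts = parser.can_fetch("Googlebot", BASE + "/drafts/post.html")
googlebot_secret = parser.can_fetch("Googlebot", BASE + "/secret/plan.html")

# Any other bot falls back to the "*" group.
other_secret = parser.can_fetch("ExampleBot", BASE + "/secret/plan.html")
other_press_kit = parser.can_fetch("ExampleBot", BASE + "/secret/press-kit/logo.png")

print(googlebot_drafts, googlebot_secret, other_secret, other_press_kit)
# prints: False True False True
```

A bot that finds a group naming it ignores the “*” group, so rules meant for every crawler have to be repeated in each specific group.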

Example of a Robots.txt File

Below is an example structure illustrating what a basic robots.txt file might look like:

User-agent: *

Disallow: /secret/

Allow: /public/

This example file prevents all search engines (User-agent: *) from crawling the “/secret/” folder and its contents while allowing the “/public/” folder to be crawled.

Points to Consider

The Robots.txt file should be located in the root directory of your website.

The file name must be exactly “robots.txt,” in lowercase.

An incorrectly configured or incomplete Robots.txt file can prevent search engines from properly indexing your website. Therefore, it is essential to be careful and precise.

Conversely, a misconfigured Robots.txt file may allow crawling of content you wish to keep private. Because the file is publicly readable and compliance is voluntary, it is not a substitute for access control.
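One way to catch both kinds of mistake before publishing is to test the file against URLs whose status you already know. The following is a rough sketch, not a complete validator; the function name `audit_robots_txt` and the sample URLs are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def audit_robots_txt(robots_txt, user_agent, must_allow, must_block):
    """Return a list of problems found for the given expectations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


# Hypothetical draft with a typo: "/secrets/" instead of "/secret/".
draft = "User-agent: *\nDisallow: /secrets/\n"

issues = audit_robots_txt(
    draft,
    "Googlebot",
    must_allow=["https://www.examplesite.com/blog/"],
    must_block=["https://www.examplesite.com/secret/report.html"],
)
for issue in issues:
    print(issue)
# prints: crawlable but should be blocked: https://www.examplesite.com/secret/report.html
```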

The Robots.txt file is a powerful tool for controlling how search engines crawl your website. By carefully creating and managing this file, you can increase your website’s visibility and protect it from unwanted crawling.

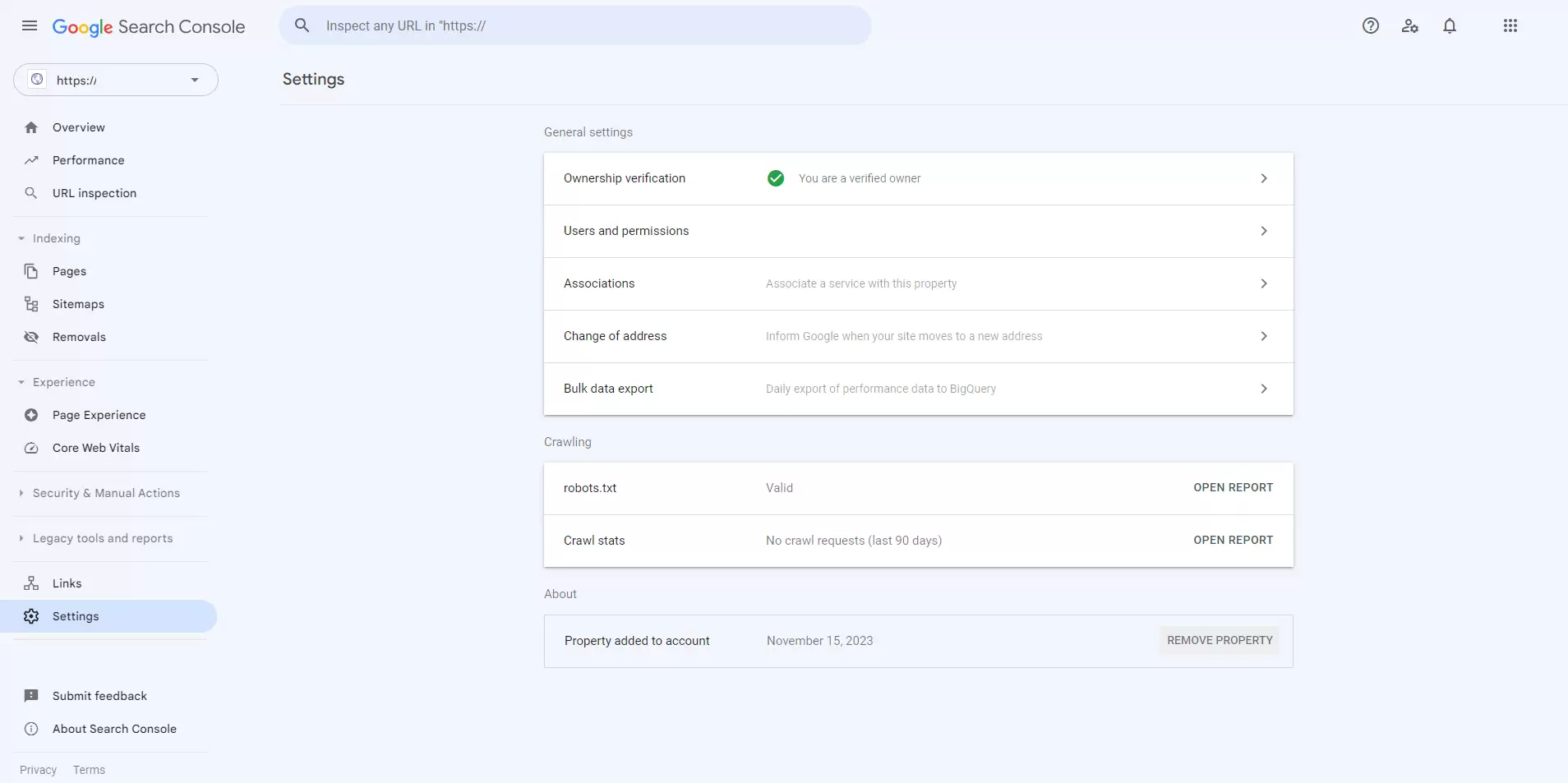

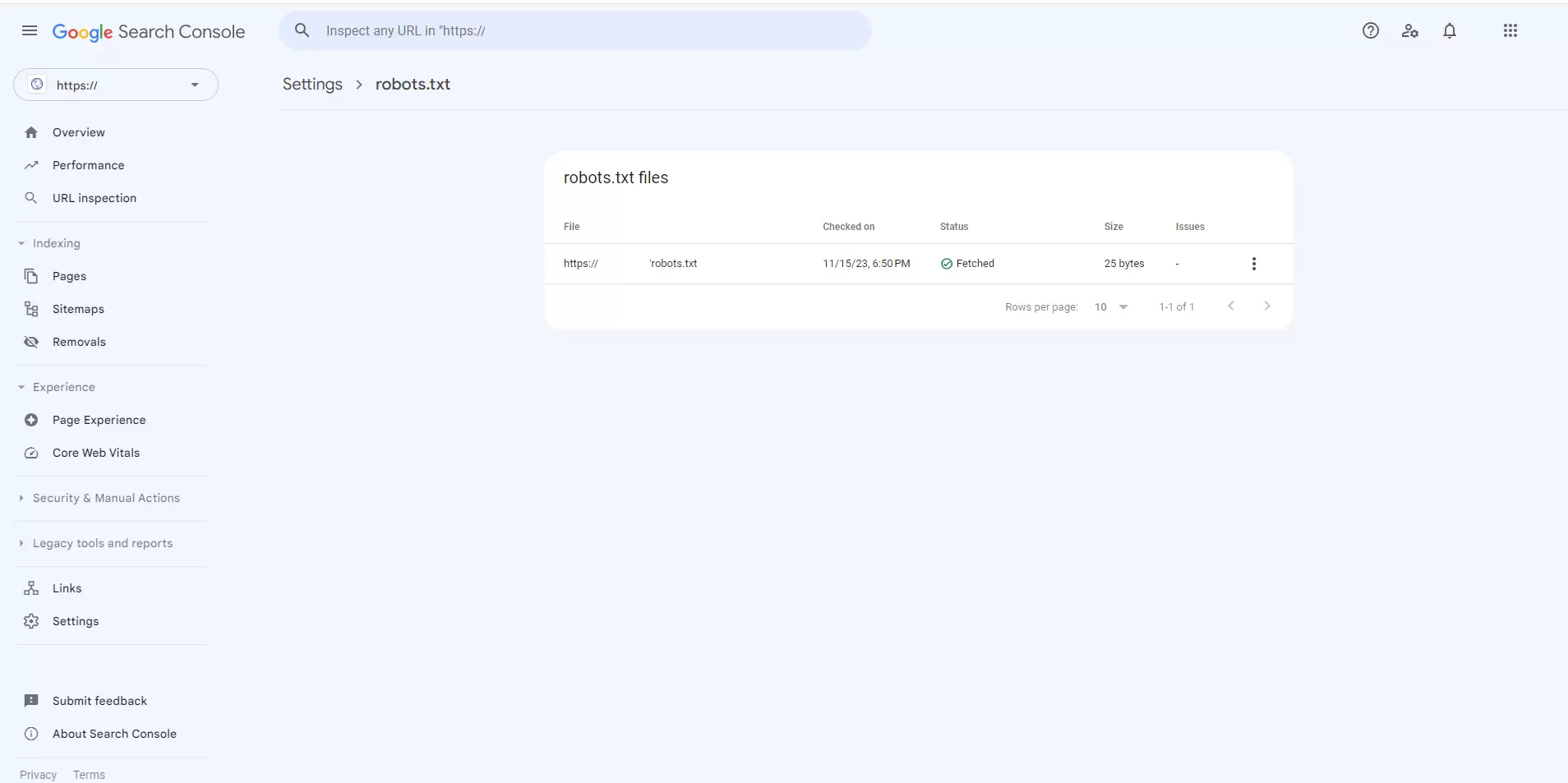

For more detailed information about Robots.txt, you can visit the Google Help Center.

Robots.txt Creation Tools:

Robots.txt Generator:

Various online generators let you create a Robots.txt file quickly by choosing which directories or pages to block.
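What such a generator does can be approximated in a few lines. The sketch below is a simplified illustration, not the behavior of any particular tool; the function `build_robots_txt` and its input format are assumptions.

```python
def build_robots_txt(rules: dict[str, list[tuple[str, str]]]) -> str:
    """Build robots.txt text from {user_agent: [(directive, path), ...]}."""
    groups = []
    for user_agent, directives in rules.items():
        lines = [f"User-agent: {user_agent}"]
        for directive, path in directives:
            if directive not in ("Allow", "Disallow"):
                raise ValueError(f"unsupported directive: {directive!r}")
            lines.append(f"{directive}: {path}")
        groups.append("\n".join(lines))
    # A blank line separates one User-agent group from the next.
    return "\n\n".join(groups) + "\n"


generated = build_robots_txt(
    {"*": [("Disallow", "/secret/"), ("Allow", "/public/")]}
)
print(generated, end="")
# prints:
# User-agent: *
# Disallow: /secret/
# Allow: /public/
```

The result should be saved as “robots.txt” in the root directory of the website.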